The Evolution of Website Monitoring: From Basic Uptime Checks to Advanced User Experience Analytics

Over the years, website monitoring has evolved from basic uptime checks to advanced analytical methods. In the early days, administrators and webmasters had to test a website manually to determine whether it was working properly. Things are quite different now: website monitoring is easier than ever before.

As technology and customer expectations keep evolving, the need for modern tools will only increase. Many organizations and clients are looking for better solutions that save time and minimize downtime. In this post, we trace the evolution of website monitoring and point to some of the latest tools and techniques you can use to monitor a website effectively.

The Early Days: Uptime and Basic Performance Checks

Website monitoring emerged in the 1990s, when businesses began to use websites. At that time, uptime was the main concern: companies simply expected their website to be reachable every day.

Tools such as Nagios and, later, Pingdom were built for this purpose. They sent HTTP or ICMP (ping) requests at regular intervals and triggered an email or text alert when a check failed. The alert told the team that something was wrong, but gave little insight into why the downtime happened.
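A basic uptime check of this kind can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how Nagios or Pingdom are implemented; the names `check_once`, `http_status`, and `should_alert` are hypothetical, and the rule of alerting after two consecutive failures is an assumption.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class CheckResult:
    url: str
    up: bool
    status: Optional[int]
    elapsed_ms: float
    error: Optional[str] = None


def http_status(url: str, timeout: float = 5.0) -> int:
    """Fetch the URL and return its HTTP status code."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as exc:
        # 4xx/5xx responses still carry a status code worth reporting.
        return exc.code


def check_once(url: str, fetch: Callable[[str], int] = http_status) -> CheckResult:
    """Run one uptime check; any 2xx or 3xx status counts as 'up'."""
    start = time.monotonic()
    try:
        status = fetch(url)
    except OSError as exc:  # DNS failure, refused connection, timeout
        elapsed = (time.monotonic() - start) * 1000
        return CheckResult(url, False, None, elapsed, str(exc))
    elapsed = (time.monotonic() - start) * 1000
    return CheckResult(url, 200 <= status < 400, status, elapsed)


def should_alert(history: List[CheckResult], failures_needed: int = 2) -> bool:
    """Alert only after consecutive failures, so a single blip stays quiet."""
    recent = history[-failures_needed:]
    return len(recent) == failures_needed and not any(r.up for r in recent)
```

In practice a scheduler would call `check_once` every minute or so, append the result to a history list, and send the email or text message when `should_alert` returns true. The `fetch` parameter exists so the check can be exercised without a network connection.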

Because uptime checks alone proved insufficient, load testing tools emerged. These tools simulate traffic as if it came from real visitors. From such a simulation, a team can estimate the maximum number of concurrent users a website can support before performance starts to degrade. It was a step up from merely tracking uptime to measuring performance, although only under controlled conditions and usually before deployment.
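The idea behind a load test can be sketched as follows: simulate a growing number of concurrent users, record latencies at each level, and report the largest level that stays within a latency budget. This is a simplified sketch, not a replacement for a real load testing tool; the function names and the choice of the 95th percentile as the cut-off are assumptions.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def run_step(request_fn: Callable[[], None], users: int,
             requests_per_user: int = 5) -> List[float]:
    """Simulate `users` concurrent visitors; return every request latency in ms."""
    def one_user() -> List[float]:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            request_fn()  # hypothetical callable that performs one request
            latencies.append((time.perf_counter() - start) * 1000)
        return latencies

    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(one_user) for _ in range(users)]
        return [ms for future in futures for ms in future.result()]


def max_supported_users(latencies_by_users: Dict[int, List[float]],
                        p95_limit_ms: float) -> int:
    """Largest tested user count whose p95 latency stays within the limit."""
    supported = 0
    for users in sorted(latencies_by_users):
        if percentile(latencies_by_users[users], 95) > p95_limit_ms:
            break  # performance has started to degrade
        supported = users
    return supported


# Illustrative, made-up measurements: latencies in ms per concurrency level.
measured = {10: [100, 120], 50: [150, 180], 100: [400, 900]}
print(max_supported_users(measured, p95_limit_ms=300))
```

With these made-up numbers and a 300 ms budget, the sketch reports 50 users, because the 100-user step is the first one to exceed the budget.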

Synthetic Monitoring and Real User Monitoring Emergence

Over time, the demand for near-zero downtime grew, and the limitations of older methods became clear. This led to newer, better approaches. One of them was synthetic monitoring, which runs scripted tests against a website to see how it behaves before real users are affected. With this information, webmasters could catch problems early and minimize downtime.

Synthetic monitoring, also known as transaction monitoring, takes a proactive approach. Instead of waiting for end users to discover problems, synthetic tests imitate user actions such as logging in, searching for products, or completing a checkout. Run from multiple geographic locations, these simulations help find regional failures and latency problems.
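A synthetic transaction boils down to an ordered script of steps, each timed and checked. The sketch below shows that structure only; the step callables are hypothetical placeholders for whatever actually drives the site (an HTTP client or a headless browser, for example), and stopping at the first failed step is an assumed design choice.

```python
import time
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class StepResult:
    name: str
    ok: bool
    elapsed_ms: float
    error: Optional[str] = None


def run_transaction(steps: List[Tuple[str, Callable[[], None]]]) -> List[StepResult]:
    """Run scripted steps in order, stopping at the first failure."""
    results = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()  # a step signals failure by raising an exception
        except Exception as exc:
            elapsed = (time.perf_counter() - start) * 1000
            results.append(StepResult(name, False, elapsed, str(exc)))
            break  # later steps depend on this one, so skip them
        elapsed = (time.perf_counter() - start) * 1000
        results.append(StepResult(name, True, elapsed))
    return results


def first_failure(results: List[StepResult]) -> Optional[str]:
    """Name of the step that failed, or None if the whole journey passed."""
    for result in results:
        if not result.ok:
            return result.name
    return None
```

Running the same script on a schedule from several regions, and comparing the per-step timings, is what surfaces the regional failures and latency differences described above.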

The method proved effective because it works even in the middle of the night, when few real users are online: synthetic monitoring lets companies evaluate performance and confirm that important processes are running smoothly. For international businesses looking for regional performance differences, it has proved a valuable asset.

The other method, real user monitoring (RUM), revealed how real users actually experience a site. RUM gathers data from actual visitors’ sessions, measuring characteristics such as load time, TTFB (Time to First Byte), FCP (First Contentful Paint), and bounce rate. Developers and website owners then use this information to improve website performance.
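On the server side, RUM data is typically reduced to a few summary numbers. The following sketch aggregates a list of session records into 75th-percentile timings and a bounce rate, optionally grouped by a field such as device. The record fields (`ttfb_ms`, `fcp_ms`, `load_ms`, `pages_viewed`, `device`) and the definition of a bounce as a single-page session are assumptions made for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, List


def p75(values: List[float]) -> float:
    """Nearest-rank 75th percentile of a non-empty list."""
    ordered = sorted(values)
    return ordered[math.ceil(0.75 * len(ordered)) - 1]


def summarize_sessions(sessions: List[dict]) -> Dict[str, float]:
    """Summarize timing metrics and bounce rate for a batch of sessions."""
    bounces = sum(1 for s in sessions if s["pages_viewed"] <= 1)
    return {
        "ttfb_p75_ms": p75([s["ttfb_ms"] for s in sessions]),
        "fcp_p75_ms": p75([s["fcp_ms"] for s in sessions]),
        "load_p75_ms": p75([s["load_ms"] for s in sessions]),
        "bounce_rate": bounces / len(sessions),
    }


def summarize_by(sessions: List[dict], field: str) -> Dict[str, Dict[str, float]]:
    """Same summary, split by a segment such as device, browser, or country."""
    groups = defaultdict(list)
    for session in sessions:
        groups[session[field]].append(session)
    return {segment: summarize_sessions(rows) for segment, rows in groups.items()}


# Made-up sample data for illustration only.
sample = [
    {"device": "desktop", "ttfb_ms": 120, "fcp_ms": 900, "load_ms": 2000, "pages_viewed": 3},
    {"device": "mobile", "ttfb_ms": 200, "fcp_ms": 1500, "load_ms": 3100, "pages_viewed": 1},
    {"device": "desktop", "ttfb_ms": 90, "fcp_ms": 800, "load_ms": 1800, "pages_viewed": 5},
    {"device": "mobile", "ttfb_ms": 400, "fcp_ms": 3000, "load_ms": 6500, "pages_viewed": 1},
]
print(summarize_by(sample, "device"))
```

Percentiles are used here rather than averages because a handful of very slow sessions can hide behind a healthy-looking mean.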

Above all, this shift changed the purpose of monitoring. Website monitoring developed from simply finding issues to actively optimizing the user experience. Companies could now tackle critical questions such as: How does a device, browser, or location affect performance? Does performance influence conversion rates or customer behavior?

The UX Revolution: User-Centric Monitoring

Once RUM had proved its value, the next need was to discover how people engaged with a website, not just whether they did. Tools such as Google Analytics and Hotjar emerged, offering behavioral analytics, session recordings, heatmaps, and click tracking. The user-centric information they generated allowed businesses to find exactly where visitors lost interest or became confused.

Thus, from the mid-2000s onward, user-centric monitoring data became vital to CRO (Conversion Rate Optimization) and UX design. It also guided decisions about website architecture and personalized content strategies. The findings reached beyond IT teams to marketing, sales, and design departments.

Building on these analytics, Google formally made Core Web Vitals a ranking factor in 2021. The metrics were Largest Contentful Paint (LCP), First Input Delay (FID), and Cumulative Layout Shift (CLS), which quantify actual user experience; in 2024, Google replaced FID with Interaction to Next Paint (INP). Sites that fell short of the thresholds risked losing search engine visibility. Website monitoring systems quickly evolved to report these metrics. Performance now mattered for maintaining organic search traffic as well as for keeping visitors satisfied.
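To make the thresholds concrete, here is a small sketch that rates a measured value using Google’s published boundaries for the three metrics named above (LCP in seconds, FID in milliseconds, CLS unitless). The function names are hypothetical, and the sketch assumes the values passed in are already 75th-percentile figures from real-user data, which is how Google assesses a page.

```python
from typing import Dict, Tuple

# (upper bound for "good", upper bound for "needs improvement")
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def rate_metric(name: str, value: float) -> str:
    """Classify one metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[name]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


def passes_core_web_vitals(p75_values: Dict[str, float]) -> bool:
    """A page passes only when every supplied metric rates as good."""
    return all(rate_metric(name, value) == "good"
               for name, value in p75_values.items())


print(rate_metric("LCP", 3.0))
print(passes_core_web_vitals({"LCP": 2.1, "FID": 80, "CLS": 0.05}))
```

A monitoring dashboard can run this kind of rating on each page template to show which ones put search visibility at risk.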

Modern Monitoring: AI, Machine Learning, and Predictive Analytics

From around 2015, almost every business had come to depend on its website as the main link to its customers, so avoiding downtime became essential. At the same time, the volume of monitoring data grew so large that older methods could no longer cope. Modern systems began applying artificial intelligence and machine learning to sift through this data and surface useful insights. Instead of only reacting to issues, businesses can now forecast and prevent them.

AI tools followed that could detect traffic anomalies, forecast server load surges, and propose UX adjustments based on user behavior. These tools simplified monitoring and automated root-cause analysis, greatly reducing Mean Time to Resolution (MTTR) during failures.
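Production systems use far more sophisticated models, but the core idea of anomaly detection can be shown with a simple statistical stand-in: compare each new data point with a rolling baseline and flag it when it deviates too far. The sketch below uses a rolling z-score; it is not machine learning, and the window size and threshold are arbitrary assumptions.

```python
import statistics
from typing import List


def find_anomalies(values: List[float], window: int = 5,
                   threshold: float = 3.0) -> List[int]:
    """Return indexes whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mean = statistics.fmean(baseline)
        spread = statistics.pstdev(baseline)
        if spread == 0:
            continue  # flat baseline: z-score is undefined, so skip this point
        z_score = abs(values[i] - mean) / spread
        if z_score > threshold:
            anomalies.append(i)
    return anomalies


# Made-up requests-per-minute series with one obvious spike at index 6.
requests_per_minute = [100, 102, 98, 101, 99, 100, 250, 101]
print(find_anomalies(requests_per_minute))
```

Note that the spike temporarily inflates the baseline for the points that follow it, which is one reason real tools use more robust methods than this sketch.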

Integrating Business and Monitoring Metrics

The most sophisticated monitoring systems today combine technical metrics with business KPIs (Key Performance Indicators). This integration helps companies understand not only how their websites perform but also how that performance affects revenue and customer satisfaction.

For example, how much does the conversion rate drop when a page takes an extra second to load? Which customer segments are most affected by performance problems? These insights bridge the gap between IT and business strategy.
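One simple way to approach the first question is to group sessions by how long the page took to load and compare conversion rates across the groups. The sketch below does exactly that with made-up data; the input format, a list of `(load_seconds, converted)` pairs, is an assumption.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def conversion_by_load_second(
        sessions: Iterable[Tuple[float, bool]]) -> Dict[int, float]:
    """Conversion rate per whole-second load-time bucket."""
    totals = defaultdict(int)
    conversions = defaultdict(int)
    for load_seconds, converted in sessions:
        bucket = int(load_seconds)  # e.g. 1.0 to 1.99 s falls into bucket 1
        totals[bucket] += 1
        conversions[bucket] += int(converted)
    return {bucket: conversions[bucket] / totals[bucket]
            for bucket in sorted(totals)}


# Made-up sessions: (page load time in seconds, did the visitor convert?)
sessions = [
    (1.2, True), (1.8, True), (1.5, False), (1.1, True),
    (2.3, True), (2.9, False), (2.4, False), (2.6, False),
]
rates = conversion_by_load_second(sessions)
print(rates)
```

A gap between buckets shows correlation, not proof of cause: slower sessions may also come from different devices or regions, so the comparison is best repeated within each segment.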

The Future: What Lies Ahead

Website monitoring is set for another significant advance as digital interactions develop. Future systems are likely to become more distributed, more intelligent, and more privacy-sensitive. Here is a closer look at what the next frontier in monitoring calls for and the tools already clearing the path.

1. Monitoring in the Age of Edge Computing and IoT

Traditional centralized monitoring systems are inadequate given the rapid growth of edge computing and the Internet of Things (IoT). To lower latency and increase performance, companies are increasingly running applications and services close to the user, at the “edge” of the network. This distributed architecture calls for monitoring solutions that can assess real-time performance across millions of devices, sensors, and microservices simultaneously.

In fields such as smart city infrastructure, autonomous vehicles, and telemedicine, every millisecond matters. Monitoring systems must track data from edge nodes, detect congestion, and quickly trigger response mechanisms.

2. Analytics and Privacy-First Monitoring

As data privacy becomes a non-negotiable requirement, particularly under regulations such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA), website monitoring has to respect user privacy while still providing useful information.

Future-ready monitoring systems have to gather and process only essential information, and do so securely, anonymously, and with user consent. This change is driving the sector away from invasive third-party cookies and toward privacy-respecting analytics technologies.
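In code, these principles translate into three habits: collect nothing without consent, keep only an allow-list of fields, and coarsen identifiers such as IP addresses before storing them. The sketch below illustrates those habits with Python’s standard `ipaddress` module. The field names and allow-list are hypothetical, and truncating an IP address is a common data-minimization step rather than a guarantee of legal compliance.

```python
import ipaddress
from typing import Optional

# Hypothetical allow-list: the only raw fields the monitoring system keeps.
ALLOWED_FIELDS = {"page", "load_ms", "country"}


def anonymize_ip(ip: str) -> str:
    """Zero the host part: keep /24 for IPv4 and /48 for IPv6."""
    address = ipaddress.ip_address(ip)
    prefix = 24 if address.version == 4 else 48
    network = ipaddress.ip_network(f"{address}/{prefix}", strict=False)
    return str(network.network_address)


def sanitize_event(event: dict, consent_given: bool) -> Optional[dict]:
    """Drop the event without consent; otherwise keep only minimal data."""
    if not consent_given:
        return None
    clean = {key: value for key, value in event.items() if key in ALLOWED_FIELDS}
    if "ip" in event:
        clean["ip"] = anonymize_ip(event["ip"])
    return clean


raw = {"page": "/pricing", "load_ms": 1800,
       "ip": "203.0.113.77", "email": "a@example.com"}
print(sanitize_event(raw, consent_given=True))
```

An allow-list is used instead of a block-list so that any new field added to the event is excluded by default until someone decides it is needed.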

3. The Emergence of Integrated Experience Platforms

The lines separating monitoring, experimentation, and personalization are quickly blurring. Companies no longer want separate systems for performance tracking, A/B testing, and user experience personalization. Instead, unified platforms are emerging that integrate all these capabilities in one ecosystem.

These integrated experience platforms let teams test UX changes, assess their effect on performance in real time, and dynamically adjust user experiences based on behavior and performance data. The goal is an agile, data-driven digital environment where customer satisfaction and performance optimization go hand in hand.

Conclusion

Website monitoring has changed dramatically, from simple uptime checks to complete systems that assess every aspect of user experience, performance, and business impact. In today’s fast-moving digital environment, understanding how visitors engage with your website in real time is essential. The next stage of this evolution emphasizes predictive insights, edge and IoT monitoring, privacy-first analytics, and unified platforms that enable companies to act on data quickly and intelligently.

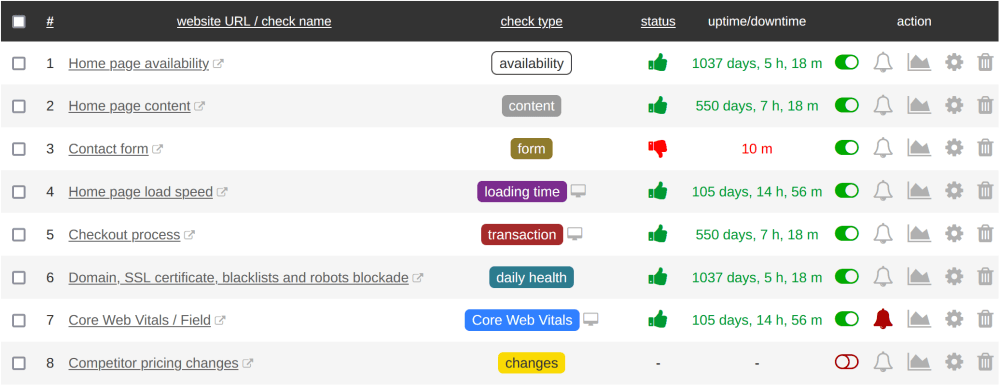

Rising user expectations and advancing technology mean that staying ahead demands the right tools. That is where Super Monitoring comes in. Built for today’s digital demands, Super Monitoring offers dependable uptime checks, thorough performance metrics, and simple reports that help you understand and optimize user journeys. Whether you run one website or a portfolio of digital assets, Super Monitoring provides the visibility and control you need to deliver smooth, high-quality experiences.

About the Author

Robert Koch is an experienced SaaS application designer and a consultant on business optimization through automation. He is an avid home brewer and cheesemaker in his spare time.